Cassie the bipedal robot recently earned a spot in the Guinness Book of World Records as the fastest two-legged robot on Earth, running the 100-meter dash in just under 25 seconds. The feat is especially impressive considering that Cassie ran blind, without an onboard camera. Instead, Cassie first learned to run in simulation, through a series of "sim-to-real" training sessions. Oregon State University's sim-to-real machine learning methods, developed over three years under the joint guidance of Jonathan Hurst and Alan Fern with contributions from several bright students, have let Cassie benefit from millions of simulations run in parallel before deployment. All that preparation readies the robot for tasks that can involve many variables, both known and unknown. Cassie has already learned how to run, hop, skip, and climb stairs, among other things.

With just two legs, Cassie can perform only a limited range of functions. The next-generation robot, named Digit, will include a torso, arms, hands, and a head. Like Cassie, Digit is a product of Agility Robotics, an Oregon State spinoff company that Hurst also heads. Digit will be much more humanoid in both shape and intention, which will dramatically expand what it can do. That opens many new opportunities for sim-to-real training, and Oregon State expects to receive a Digit within the next couple of months.

“The key point is that sim-to-real — which is teaching a system to do jobs and tasks, versus traditional programming — applies much more widely than legged robotics,” Fern said. “It’s about creating a simulator where you can practice doing something. A learning program, where the practice of an equivalent of years of experience can take place very fast, in a computer, and then allow for the task to be safely completed.”

Fern said this represents a radical departure from trying to program a set of rules that dictate a desired action.

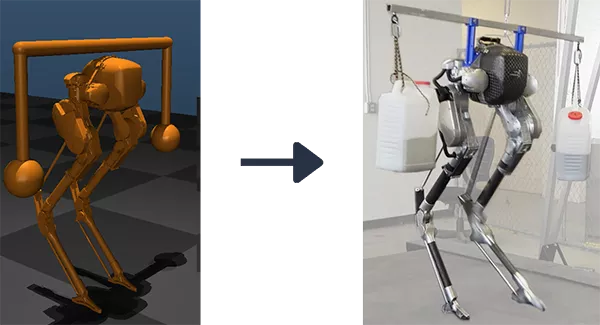

then executed in the real world (right).

“That’s an approach that doesn’t work, and it isn’t scalable,” he said. “The key is to program computers to learn, and then you figure out how to train them. One way is through simulation, although simulation will never be a perfect reflection of the real world. So, we always put in random variations to make the simulations more robust.”
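The "random variations" Fern mentions are commonly called domain randomization: each simulated practice run uses slightly different physics, so the learned behavior cannot overfit to one imperfect model of the world. The Python sketch below is a hypothetical illustration, not Oregon State's training code. The parameter names and ranges, the toy cost function standing in for a physics rollout, and the simple grid search standing in for reinforcement learning are all assumptions chosen for readability.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    """Physics the simulator cannot know exactly (hypothetical names and ranges)."""
    mass: float         # robot mass, kg
    friction: float     # ground friction coefficient
    motor_delay: float  # actuation latency, seconds


def sample_params(rng: random.Random) -> SimParams:
    """Domain randomization: draw a fresh set of physics parameters per episode."""
    return SimParams(
        mass=rng.uniform(28.0, 36.0),
        friction=rng.uniform(0.4, 1.0),
        motor_delay=rng.uniform(0.0, 0.02),
    )


def run_episode(gain: float, p: SimParams) -> float:
    """Toy stand-in for a simulated rollout; returns a cost (lower is better).

    The best gain depends on the hidden parameters, so no single gain is
    perfect in every simulated world.
    """
    ideal_gain = p.mass * p.friction / 20.0
    delay_penalty = 50.0 * p.motor_delay * gain  # larger gains suffer more from latency
    return (gain - ideal_gain) ** 2 + delay_penalty


def train_gain(candidates, episodes=1000, seed=0):
    """Pick the candidate with the lowest average cost across randomized worlds."""
    rng = random.Random(seed)
    worlds = [sample_params(rng) for _ in range(episodes)]

    def avg_cost(g):
        return sum(run_episode(g, w) for w in worlds) / len(worlds)

    return min(candidates, key=avg_cost)


if __name__ == "__main__":
    gains = [0.5 + 0.1 * i for i in range(20)]
    best = train_gain(gains)
    print(f"gain chosen under randomized physics: {best:.2f}")
```

Because the chosen gain is scored against a thousand different simulated worlds rather than one nominal model, it tends to hold up when the real robot's mass, friction, or motor latency differs from what the simulator assumed, which is the robustness the randomization is meant to buy.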

Looking ahead to workforce applications, Fern envisions robots that learn to keep their balance during physical tasks involving forces from outside the robot itself, such as carrying loads or pulling carts. He can imagine a team of robots at a construction site under the command of a single operator, and a nonexpert at that. As costs come down, he also envisions robots performing basic tasks in homes. One application could be helping older adults live more independently.

Today, the biggest hurdle is teaching robots to navigate their environments. Although many types of robots are already deployed in industry, they often need a human operator and work in environments custom-fit for them. That approach won't work in homes, which are all different. It would be cost-prohibitive to retrofit a warehouse, let alone every single-family house, specifically for a robot. Robots therefore need to adapt, understand variables, and adjust as needed. While recent advances in training robots like Cassie (and soon, Digit) have been awe-inspiring, Fern hopes to see much more progress over the next five to 10 years as artificial intelligence comes of age.

If you’re interested in connecting with the AI and Robotics Program for hiring and collaborative projects, please contact AI-OSU@oregonstate.edu.