Xiaoli Fern, associate professor in computer science, explains how she and her team are using machine learning and bird song recordings to help biologists track bird populations. Plus, Geoff Hollinger, associate professor of mechanical engineering, is teaching underwater robots to use human preferences to take on risk as they complete their scientific missions.

Transcript

[MUSIC: Eyes Closed Audio, The Ether Bunny, used with permission under a Creative Commons license.]

NARRATOR: From the College of Engineering at Oregon State University, this is Engineering Out Loud.

[birds chirping]

KRISTA KLINKHAMMER: Most of us can easily identify at least a handful of birds by the sounds they make.

[crow cawing]

KLINKHAMMER: A common crow.

[Barred Owl hoot]

KLINKHAMMER: A Barred Owl.

[ducks quacking]

KLINKHAMMER: Ducks. Others recognize many more.

[Chestnut-backed Chickadee chirping]

XIAOLI FERN: This is a Chestnut-backed Chickadee.

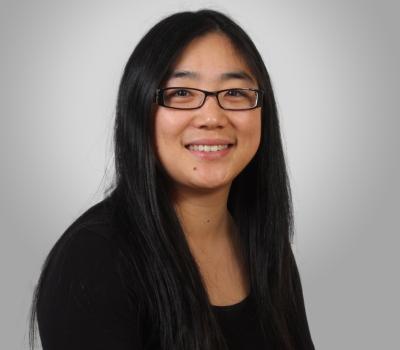

KLINKHAMMER: That's Xiaoli Fern, an associate professor in computer science. She's not one of those people who can rattle off bird species after listening to their calls. That's a little surprising, because bird songs have been at the center of her research for years. Here's a little secret: she recognized the chickadee after looking at a spectrogram, a graphic representation of its song.

FERN: We're not birders, so the songs are very hard for us to recognize. So we turned them into those visual pictures, and then we recognize those pictures. Humans are much better at processing visual information, and it turns out, computers are too.

KLINKHAMMER: I'm Krista Klinkhammer with the College of Engineering and in this episode of Engineering Out Loud, we'll begin with a look at an intriguing project undertaken by Fern and her colleagues to teach computers to decipher bird songs.

FERN: So this is for the purpose of monitoring bird species. There are lots of interesting things about birds. Birds are what they call indicator species. They actually tell you a lot about the environment, because they're influenced by many, many things. They're at the right spot on the trophic chain. So which birds are where tells you a lot about the environment. And that's where our system comes in.

KLINKHAMMER: The work could also influence environmental policies.

FERN: For example, how you want to protect the habitat can be based on the data that we collect.

KLINKHAMMER: It begins with relatively simple devices called song meters, which the researchers place at various locations in the A.H. Andrews Forest [Editor's correction: H.J. Andrews Experimental Forest], northeast of Eugene, Oregon.

FERN: We have microphones. Those are fairly low-tech microphones, nothing fancy like what we're using right now. They're weatherproof, and they're placed in the forest at specific sites, where they collect data 24/7 during the breeding season.

KLINKHAMMER: Automating data collection offers clear benefits over the old-fashioned way of field monitoring.

FERN: To generate this kind of data, to know where birds are, typically they have to send human field assistants into the field at set times. They visit sites, look around, and decide what species they see. So it's a very manual and tedious process, and it's very incomplete. They just can't be there 24/7 like a microphone can. And a lot of times you can only send them to nearby regions, so you don't get a very good sample from remote and hard-to-reach regions. The microphones give us a much more complete understanding of the distribution of birds, both in terms of time and location.

KLINKHAMMER: The volume of data speaks to the efficiency of relying on automated recording equipment. For example, in one study that Fern and her colleagues published in 2016, the song meters recorded nearly 2,600 hours of audio in the forest. Since the team began collecting data in 2009, their data set has grown to somewhere between 20 and 40 terabytes.

FERN: You can drop a recording device or box at a very remote location, leave it there to record for several months, and then collect it, and we can analyze all that data and tell you what birds are there. You cannot possibly send a person there for four or five months to record when they hear the birds and when they see them. So getting this kind of data with human, manual monitoring is just not possible.

KLINKHAMMER: All the audio files are converted to spectrograms, which are encoded into numerical arrays that the computer can read. An algorithm developed by Fern and her research group then sifts through the vast amount of spectrographic data to make sense of it all. But the computer needs a little help from humans.
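A spectrogram is typically computed with a short-time Fourier transform: slide a window along the audio and, for each slice, measure how much energy falls in each frequency band. The sketch below shows that idea in plain Python. The frame size, hop length, Hann window, and 8 kHz toy tone are illustrative assumptions, not details of Fern's pipeline.

```python
import cmath
import math

def spectrogram(samples, frame_size=256, hop=128):
    """Short-time Fourier transform magnitudes.

    Returns one row per frame; each row holds the magnitude of frequency
    bins 0..frame_size // 2 for that slice of audio."""
    # Hann window tapers each frame's edges to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        chunk = [samples[start + n] * window[n] for n in range(frame_size)]
        row = []
        for k in range(frame_size // 2 + 1):
            # Naive DFT for bin k (slow but dependency-free); real code would use an FFT.
            total = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n in range(frame_size))
            row.append(abs(total))
        frames.append(row)
    return frames

# Toy input: a pure 1 kHz tone, 0.1 s long, at an assumed 8 kHz sample rate.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(800)]
frames = spectrogram(tone)
peak_bin = max(range(len(frames[0])), key=lambda k: frames[0][k])
print(len(frames), len(frames[0]), peak_bin)  # 5 129 32
```

Bin 32 corresponds to 32 × 8000 / 256 = 1000 Hz, so the tone lands where expected. Stacking the rows gives the time-by-frequency array that a learning system can treat like an image.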

FERN: We collect bird-song recordings, and typically we cut them into shorter chunks, 10- to 15-second chunks. Then we have expert birders listen to the recordings and tell us what birds they hear.
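Cutting a long recording into fixed-length clips for the experts is a simple indexing step. In this sketch, the function name, the 10-second default, and the choice to drop a final partial clip are assumptions; a real pipeline might pad or keep that remainder instead.

```python
def chunk_recording(samples, sample_rate, chunk_seconds=10):
    """Split a long recording into consecutive fixed-length clips.
    A trailing partial clip shorter than chunk_seconds is dropped."""
    size = int(chunk_seconds * sample_rate)
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]

# Toy example: 35 seconds of silence at an assumed 1 kHz sample rate.
clips = chunk_recording([0.0] * 35_000, sample_rate=1000, chunk_seconds=10)
print(len(clips), len(clips[0]))  # 3 10000
```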

KLINKHAMMER: This is the only time in the entire process where the audio files themselves, instead of spectrographic data, play an important role.

FERN: We have several hundred of those recordings that are carefully listened to and carefully labeled, so that we know what species are in them. The learning system analyzes those recordings and, based on the labels, learns to recognize those species in the future. To train the machine learning system, we need some of the audio recordings labeled by experts to tell us which species are in each recording, and our system learns from that information. Once we have learned this prediction system, we can apply it to future recordings that we collect in the forest to identify what species are there.
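Because several species can sing in the same clip, one simple way to learn from expert labels is a separate yes/no detector per species. The sketch below trains one logistic-regression detector per species with stochastic gradient descent. The two-number feature vectors, the labels, and the choice of logistic regression are all illustrative assumptions, not the algorithm Fern's group developed.

```python
import math

def train_species_classifier(features, labels, epochs=500, lr=0.5):
    """Fit a logistic-regression detector for ONE species.
    features: list of feature vectors (e.g., summarized spectrogram values)
    labels:   1 if experts heard the species in that clip, else 0."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = predict((w, b), x)
            err = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(model, x):
    """Probability that the species is present in a clip with features x."""
    w, b = model
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical expert-labeled clips; several species may be present in one clip.
clips = [[0.9, 0.1], [0.8, 0.9], [0.1, 0.8], [0.1, 0.2]]
expert_labels = {"Varied Thrush": [1, 1, 0, 0],
                 "Chestnut-backed Chickadee": [0, 1, 1, 0]}
models = {sp: train_species_classifier(clips, ys) for sp, ys in expert_labels.items()}

new_clip = [0.9, 0.9]  # a future recording from the forest
present = [sp for sp, m in models.items() if predict(m, new_clip) > 0.5]
print(present)
```

Treating each species independently keeps the sketch simple; it ignores correlations between species that a more sophisticated multi-label method could exploit.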

[Varied Thrush chirping]

KLINKHAMMER: That's a Varied Thrush, one of the 20 or so birds that Fern is teaching the computer to recognize. If all the original audio recordings were so clear, her job would be much simpler, but that crisp sound file is courtesy of the Cornell Lab of Ornithology. A typical audio clip recorded in the Andrews Forest sounds something like this.

[nature recording with mixed sounds, slightly muffled]

FERN: Lots of background noise. We have the stream, which is pretty constant during the breeding season. And we also have rain. The raindrops can basically mask out a lot of things in the recording. And there's also other animal noise. It just messes up how we recognize things, because the system is trained to recognize certain things, and when things it hasn't heard before pop up, they kind of confuse it. And the birds, they're not taking turns. They sing on top of each other. The recordings are full of noise, and there are also multiple species in the same recording.

KLINKHAMMER: Despite the challenges, the results so far are impressive.

FERN: Right now, we're making predictions, and we're pretty reliable at making predictions at the recording level: give us a recording, and we'll tell you what species are in there. At that level, our accuracy is very competitive. It's over 90% for many of the species.

KLINKHAMMER: Fern also makes it clear that the system is not intended to produce an exact bird census. That's an unrealistic goal no matter what approach is used.

FERN: The hope, the reason we believe it would work, is that we're talking about birds in aggregate. We're not talking about a single bird, just one bird or two birds. We're not going to take one instant, like one particular half-hour segment, and say how many birds are there, because that's not going to be very reliable. It depends on the bird's particular activity during that half-hour. During that half-hour the bird may not be there, right? It's more about aggregating over a long period of time, for example, a whole week or a whole month, and how much you see. So a particularly noisy bird might be noisy during one period but quiet at other times, and in the long run, things will average out. That's pretty much all we can ask for, even with human monitoring. You have no way of knowing the ground truth.
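The aggregation Fern describes can be sketched as a detection rate: for each week, the fraction of recording clips in which the classifier flagged a species. Treating that rate as a relative index of activity, rather than a head count, is an assumption for illustration, as are the weekly bins and the toy data.

```python
from collections import defaultdict

def weekly_detection_rate(detections):
    """detections: list of (week, detected) pairs, one per recording clip,
    where detected is True if the classifier flagged the species.
    Returns {week: fraction of clips with a detection}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for week, detected in detections:
        totals[week] += 1
        hits[week] += int(detected)
    return {week: hits[week] / totals[week] for week in sorted(totals)}

# Toy per-clip detections for one species over two weeks; individual clips
# swing between present and absent, and the weekly fraction summarizes them.
clips = [(1, True), (1, False), (1, True), (1, False),
         (2, False), (2, False), (2, True), (2, False)]
print(weekly_detection_rate(clips))  # {1: 0.5, 2: 0.25}
```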

KLINKHAMMER: That ground truth may indeed be elusive, but Fern has come to terms with a much smaller truth: despite her years of researching birds and the sounds they make, she's still not very good at identifying her subjects from their songs.

[both laughing]

FERN: We're getting better at recognizing those patterns. But if you play a song...

KLINKHAMMER: So if you're out for a hike, you can't be like, "Oh..."

[both laughing]

FERN: No!

KLINKHAMMER: "Oh, that's a Varied Thrush"? No?

FERN: It doesn't help me. It doesn't help me, because we mostly work with images.

KLINKHAMMER: Oh, that's too bad.

[MUSIC: Eyes Closed Audio, The Ether Bunny, used with permission under a Creative Commons license.]

KLINKHAMMER: Thank you for tuning in to Engineering Out Loud to explore how computers and humans can work together to learn about bird songs and understand a bit more about the link between bird populations and the health of the environment. In the next part of this episode, we'll head underwater for a different take on machine learning. Stay tuned.

[birds chirping, while music plays]

[ocean noises, water lapping along shoreline]

STEVE FRANDZEL: Imagine a submarine.

[submarine sonar pinging]

FRANDZEL: Not that kind of submarine. Think smaller.

[boat motor]

FRANDZEL: Even smaller, much smaller – a submarine that fits inside the back of a pick-up truck.

[smaller boat motor]

FRANDZEL: That’s more like it. Make it yellow. Now think of a robot.

[TV CLIP (Lost in Space): Danger Will Robinson!]

FRANDZEL: Sorry, it doesn’t talk.

[mechanical robot sounds]

FRANDZEL: Sorry, no arms or legs either.

GEOFF HOLLINGER: People always think they’re really funny, because when you carry the robot around they say ‘Oh that looks like a torpedo,’ but they don’t realize we’ve heard that a million times before.

FRANDZEL: That’s Geoff Hollinger, assistant professor of mechanical engineering and robotics, describing a robotic submarine that does, in fact, resemble a miniature torpedo, some of them even yellow. It’s called an AUV.

HOLLINGER: AUV stands for autonomous underwater vehicle.

FRANDZEL: In part two of this episode of Engineering Out Loud, join me, Steve Frandzel from the College of Engineering, as we dive below the waves for a closer look at these remarkable little machines and learn more about Hollinger’s innovative work to expand the role of AUVs in unlocking the ocean’s secrets. Let’s put this thing all together: An AUV can cruise under water for a long time, so it’s definitely a submarine. And it’s a robot by any standard definition – a machine that carries out complex actions automatically.

HOLLINGER: AUVs have a number of applications that go from monitoring the ocean to gathering environmental data for oceanographers and ecologists, also inspection, maintenance and manipulation in marine renewable energy arrays and oil rigs.

FRANDZEL: They’re also used to assess submerged pipelines and cables, explore archaeological sites, locate navigation hazards, identify unstable sections of the sea bed, search for shipwrecks, map the sea floor, measure water temperature and salinity, find and destroy underwater explosives, measure light absorption and reflection, locate missing airplanes, explore geologic formations. You get the picture. Before AUVs came onto the scene, ocean researchers relied on surface ships to reach their destinations.

HOLLINGER: A lot of oceanographic data collection comes from surface ships that are going back and forth in effectively a zig-zag pattern. Running large ships can be very expensive, as much as $10,000 a day for the bigger ones. And if they go out regularly, obviously those costs really add up. But an AUV, the ocean-going ones, typically cost about $100,000 apiece, which is the equivalent of only about 10 days of ship time. Yet these AUVs can go out for months and have lifespans of years: 10 years, sometimes decades or more. That allows us to do continuous monitoring in a way that wasn’t possible before. So what we can do are things like track biological hotspots or find locations that are particularly interesting, then stick around for long periods of time to see how they change and evolve. You really can’t be there with ships, you can’t be there all the time. You have to go back and refuel and restock and get new crew, and it’s expensive to be out there in the first place. AUVs open up the possibility of getting all kinds of data that oceanographers just didn’t have before.
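Hollinger's cost comparison is a single division, using the upper-end daily rate he quotes for the bigger ships:

$$
\frac{\$100{,}000 \text{ per AUV}}{\$10{,}000 \text{ per ship-day}} = 10 \text{ ship-days}
$$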

FRANDZEL: So they’re versatile and they’re cheap, and they come in a variety of designs.

HOLLINGER: One example of an AUV that’s commonly used these days by the Navy and oceanographers is the Slocum Glider. This is a buoyancy-driven vehicle that can go out for weeks at a time and gather data about things like temperature and salinity, chlorophyll, dissolved oxygen, and various other factors that are going to be useful for scientists and for understanding the marine environment.

FRANDZEL: The Slocum Glider weighs about a hundred pounds and cruises leisurely at about a mile or two an hour. There are much larger and faster AUVs, but few have the glider’s ability to sortie for such a long time, surfacing periodically to check its position, receive instructions, and transmit scads of data that it’s collected through various sensors. But data quantity, while important, is only part of the story.

HOLLINGER: Our work is about getting better data. So when an AUV goes out there, it’s gathering data for the oceanographer, and we want it to gather data that is the most useful. So this is part of data science, this is part of big data. You can have large quantities of data that might not even be useful. If I just have the vehicle sit in one place and gather data, that’s not going to be particularly useful. But if I get the right data, that makes the data analytics easier, because now you can learn things from that data, you can infer patterns.

FRANDZEL: But because they’re so small and slow, AUVs voyage into harm’s way every time they head out into the dynamic and unforgiving aquatic environment.

HOLLINGER: Oceanographers have various criteria they want to satisfy. They want to reach locations they’re interested in, but they also want the robot to do its work quickly and minimize the risk of getting damaged, like if the AUV surfaces in a busy shipping lane and collides with a larger vessel, or if it encounters currents that push it into rocks or far off course.

FRANDZEL: It comes down to the old conundrum of risk versus reward.

HOLLINGER: As a researcher, I have to decide how important it is for the robot to get exactly to a particular waypoint, how much additional risk I’m willing to take to do that. Am I willing to accept that additional risk, or do I accept the safer trajectory that may not completely fulfill my objectives? It’s the idea that we have multiple competing preferences. What we want to do is develop a system that allows us to capture the tradeoffs that humans have to make in these decisions and use that to do better and more efficient planning with AUVs.

FRANDZEL: Reaching that goal, though, means humans and machines must join forces.

HOLLINGER: Typically humans don’t do a great job of making decisions when we’re buffeted with large amounts of information. Think about what your first reaction might be when you see a big string of numbers that represents ocean currents over a large spatial expanse. What can you do with that? Computers, on the other hand, do an excellent job of processing large amounts of data quickly. So if you have an AUV, and you want it to travel a route that’s both safe and also hits all of the important spots where you want to collect data, you’re probably not going to do a great job of plotting it by yourself, because there’s no way for you to evaluate all the possible trajectories and then choose the optimal one. But the AUV doesn’t really know my preferences for gathering data. So this is where we can merge the strengths of the computers and the humans by encoding the preferences of the human and combining those with the big data processing capabilities of the machine.
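One simple way to make "evaluate all the possible trajectories and then choose the optimal one" concrete is to score each candidate route by its reward minus a weighted penalty for risk. The `Trajectory` fields, the numbers, and the linear weighting below are illustrative assumptions rather than Hollinger's formulation. The point is that a single weight captures how much reward the operator demands per unit of risk, which is exactly the kind of preference the AUV cannot know on its own.

```python
from dataclasses import dataclass

@dataclass
class Trajectory:
    name: str
    reward: float  # hypothetical: value of the data the route would collect
    risk: float    # hypothetical: e.g., estimated chance of collision or loss

def best_trajectory(candidates, risk_weight):
    """Pick the route maximizing reward - risk_weight * risk.
    risk_weight encodes how much reward the operator demands per unit of risk."""
    return max(candidates, key=lambda t: t.reward - risk_weight * t.risk)

routes = [
    Trajectory("direct to waypoint", reward=10.0, risk=0.30),
    Trajectory("detour around shipping lane", reward=7.0, risk=0.05),
]
print(best_trajectory(routes, risk_weight=5.0).name)   # direct to waypoint
print(best_trajectory(routes, risk_weight=20.0).name)  # detour around shipping lane
```

The same two routes produce different "optimal" answers depending only on the weight, which is why learning that weight from the human matters.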

FRANDZEL: Now we’re getting to the heart of Hollinger’s work: Fusing the human talent for solving complex problems with the computer’s unsurpassed number-crunching power.

HOLLINGER: So we’re building systems where computers, namely AI systems, and people collaborate to make decisions, and we’ve trained AUVs to plan routes that balance data collection goals against risk in a way that mirrors the priorities of their human operators. Oceanographers have been sending these vehicles out into the ocean for years, and they have lots of expertise, but that expertise is hard to quantify. Until now there’s been no way to code it into an artificial intelligence system. So our main idea is to develop a system that captures the preferences the human experts have built up over time and encodes them into the artificial system, so we can get the best of both worlds. We want a human-robot team that can accomplish what neither could accomplish alone.

FRANDZEL: To create that teamwork, Hollinger came up with a process of give and take between human and machine.

HOLLINGER: So what we did is we conducted simulations where the computer projects a trajectory for the AUV based on some fundamental mission goals. Next, a human takes a look at that proposed route on the computer screen and modifies it a little bit by dragging a single waypoint to a new position that he or she prefers more. The algorithm that we design sees this and thinks, OK, well, if you did that, here’s how much risk you’re willing to take in exchange for a certain level of reward you get by taking that particular path or meeting those particular objectives. That process is repeated over and over, and each time the computer learns more about the person’s preferences. So every time the path gets changed by the human, the computer recalculates the optimal route for the AUV. That’s how it learns the human’s underlying preferences.

FRANDZEL: The learning algorithm even compensates for the quirkiness of our species.

HOLLINGER: So humans are always making decisions that are out of the ordinary and unpredictable. They may move a single point in a route that indicates a high tolerance for risk, and then make another change that indicates much less tolerance for risk. We argue that our method for human-robot learning deals with that kind of inconsistency in an intelligent way.
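The episode does not spell out the math, so the following is only a sketch of one common way to learn a risk preference from corrections like these. It assumes the operator's utility is reward minus a weight times risk, that the weight is one of a few hypothetical candidate values, and that each waypoint drag means "the human prefers the edited route to the proposed one." A Boltzmann (softmax) choice model turns each edit into a likelihood, so an inconsistent edit lowers a weight's probability instead of ruling it out.

```python
import math

# Hypothetical candidate values for the operator's risk weight.
WEIGHTS = [1.0, 5.0, 10.0, 20.0, 40.0]

def utility(route, w):
    reward, risk = route
    return reward - w * risk

def update_belief(belief, chosen, rejected, rationality=1.0):
    """Bayesian update after the human prefers `chosen` over `rejected`.
    The softmax choice model lets an occasional inconsistent edit lower,
    rather than eliminate, a weight's probability."""
    posterior = {}
    for w, p in belief.items():
        a = rationality * utility(chosen, w)
        b = rationality * utility(rejected, w)
        likelihood = 1.0 / (1.0 + math.exp(b - a))  # P(chosen preferred | w)
        posterior[w] = p * likelihood
    total = sum(posterior.values())
    return {w: p / total for w, p in posterior.items()}

belief = {w: 1 / len(WEIGHTS) for w in WEIGHTS}  # start from a uniform prior
# Each edit: (route after the human's drag, route the planner proposed), as (reward, risk).
edits = [((7.0, 0.05), (10.0, 0.30)),   # human trades reward for safety
         ((6.0, 0.02), (9.0, 0.25)),    # ...and again
         ((10.0, 0.30), (7.0, 0.05))]   # one inconsistent, risk-seeking edit
for chosen, rejected in edits:
    belief = update_belief(belief, chosen, rejected)
best_w = max(belief, key=belief.get)
print(best_w)  # 20.0
```

After two safety-minded edits and one risk-seeking edit, the belief still favors a high risk weight. The contradictory edit pulls probability away from the most extreme weight rather than erasing what the earlier edits taught, and the planner would then replan with the updated weight.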

FRANDZEL: Hollinger put his machine learning system to the test both in the lab and in the field, with an AUV in a California reservoir. In this case, he used a vehicle similar to the Slocum Glider.

HOLLINGER: The computer simulation showed that it’s possible to design systems for autonomous navigation that capture the essence of the human’s priorities. When we conducted field tests using a six-foot-long AUV, the YSI EcoMapper, the robot followed a route that successfully combined a water depth of about six meters and a water temperature near 27 degrees Celsius, which were the values the human preferred. The results were the same as we had seen in the simulation, which validated the simulation. This provided solid evidence that the algorithm had adopted the human’s preferences and adjusted its solutions appropriately.

FRANDZEL: The positive results bode well for the future of AUVs and underwater exploration.

HOLLINGER: So far our ideas work in terms of the scientific principles. If they continue to work in practice, then this will allow a single human to control multiple robots and large-scale systems. The potential data-gathering power we’ll have from these systems that act intelligently, and almost independently, will substantially improve the amount and quality of data we’re bringing back to the oceanographers, which is going to give us a lot of insight into ocean ecosystems and how life survives in the ocean.

FRANDZEL: Thank you for tuning in to Engineering Out Loud. We hope you came away with a greater understanding of machine learning and the impact it has on scientific exploration in the sky and under the water. This episode was produced by Krista Klinkhammer and Steve Frandzel, with additional editing by Mitch Lea. We’d like to thank the Cornell Lab of Ornithology for clips of bird songs. Our intro music is The Ether Bunny by Eyes Closed Audio on SoundCloud, used with permission under a Creative Commons 3.0 license. For more episodes, visit engineeringoutloud.oregonstate.edu