Eric Slyman builds tools to uncover where artificial intelligence makes mistakes.

Specifically, the Ph.D. student in artificial intelligence and computer science studies how AI learns social biases. And they’ve built a tool that helps AI auditors address those biases quickly, accurately and economically.

Bias in AI can show up, for example, when a user asks it to find or create an image of a doctor.

More than likely, the result will be an image of a 30- to 50-year-old white man. Or if a user gives AI a photo of a Black woman in a lab coat with a stethoscope and asks whether there’s a doctor in it, AI’s response would likely be, “No, but there’s a nurse.”

So, where do these stereotypical responses come from?

Slyman explains that it’s a matter of numbers. AI is trained on the internet, learning to connect vision and language from a massive dataset of images and descriptions uploaded to social media, news outlets and other websites. Those inputs lead AI to problematic outputs, including racism, sexism, ageism, heteronormativity and other biases that reflect the internet’s dominant culture: white, male and cisgender.

For Slyman, this isn’t just a technical problem. It’s personal.

Slyman is queer, and if they ask for an image of someone wearing makeup or a dress, “I will never see a picture of someone that looks like myself. This technology doesn’t work for people that identify the way I do,” they say. “AI is hard to use when there’s some way that you’re not in the mainstream — and almost everyone has some way in which they’re not in the mainstream.”

The current process for addressing vision and language bias in AI relies on auditors, most of whom work in academia, at independent AI research institutions or at companies developing AI products, such as Adobe, Google and Microsoft. Auditors identify stereotypes such as “men wear suits” by gathering and annotating examples to form large datasets, a process that’s time-consuming and expensive.

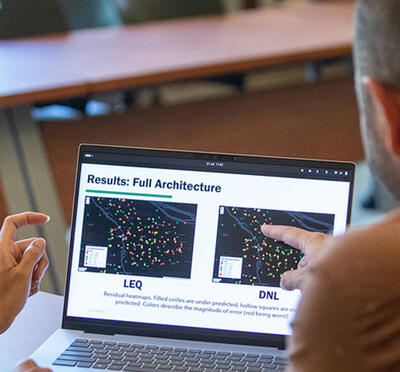

Slyman’s solution is VLSlice, an interactive bias discovery tool that uses AI itself to replace manual data collection, doing in just a few minutes work that previously would have taken weeks. Auditors query an AI system by specifying a subject, such as a photo of a person, and then a specific potential bias they want to evaluate, such as what types of people represent a CEO. The AI responds with sets of images that might demonstrate a stereotype, for instance, men wearing suits. Auditors can then work back and forth with the AI to gather more supporting evidence, actively teaching it to better identify these biases.

Tests of VLSlice indicate it helps auditors identify biases with greater precision and with less cognitive fatigue, Slyman says. They have made VLSlice open source to encourage more widespread testing and further refinement of the tool.

Slyman recognizes that there’s a race to get AI products into the marketplace and that having a faster, less expensive tool for identifying and correcting AI bias can be a competitive advantage.

They say VLSlice is not just for the Googles of the world but for smaller companies with fewer resources who want to build more accurate, more representative AI technology.

“The more your product works for everybody, the more you’re able to sell it to everybody, and the more money you’re able to make,” Slyman says. “That’s a pretty good incentive.” Or put another way, it’s smart business.

Slyman also presented their research in a Graduate Research Showcase talk in April 2023.

This story originally appeared in Oregon State University’s Office of Institutional Diversity 2024 newsletter.